When people start making music, the first things they deal with are learning the DAW, effects like equalizers and compressors, recording, writing MIDI... But only a few people deal with the concepts of sample rate and bit depth.

Yet these two concepts are central to digital audio and are not difficult to understand. Once you understand them, life gets a lot easier, at least when it comes to exporting your projects or importing samples into them.

Part of the blame lies with modern DAWs like Ableton Live, which handle sample rate and bit depth so transparently that producers never have to think about them: Live automatically converts audio files to the project's sample rate without prompting. In Pro Tools, for example, you have to actively convert samples before you can import them.

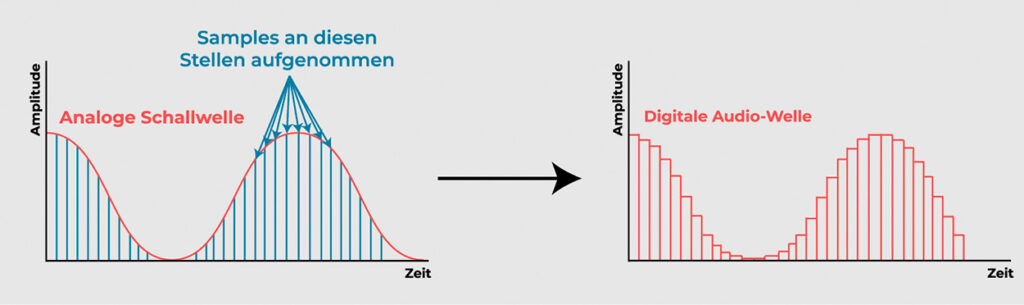

To understand the concept, we must first look at the process of converting analog audio signals to digital audio signals.

What is digital audio?

Digital audio is sound that has been recorded in a digital format. This means that the audio signal is represented by a sequence of numbers that can be stored and processed by digital devices such as computers, digital audio players, and digital audio workstations.

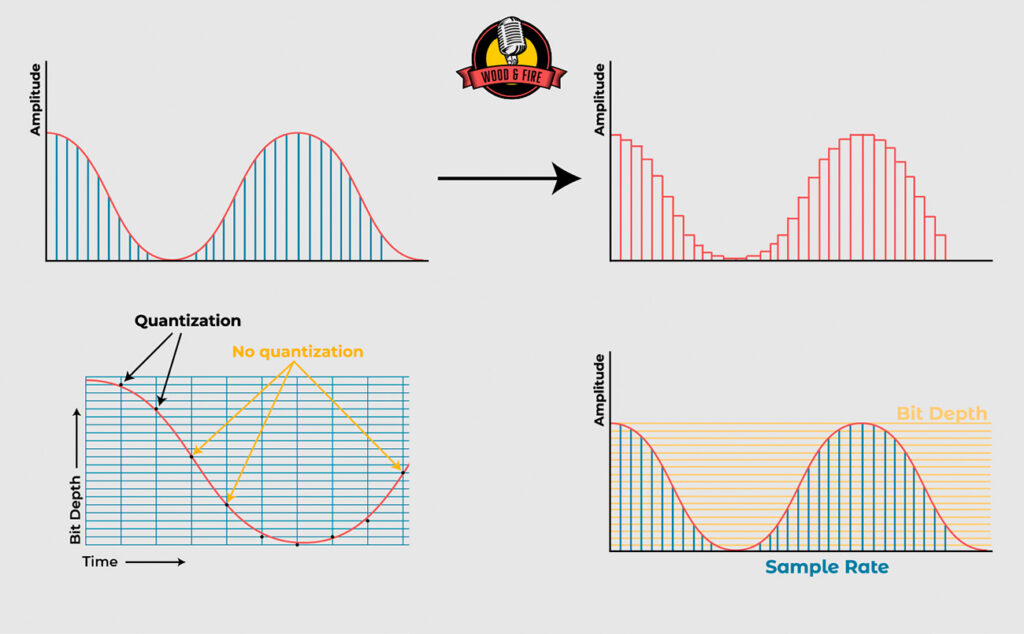

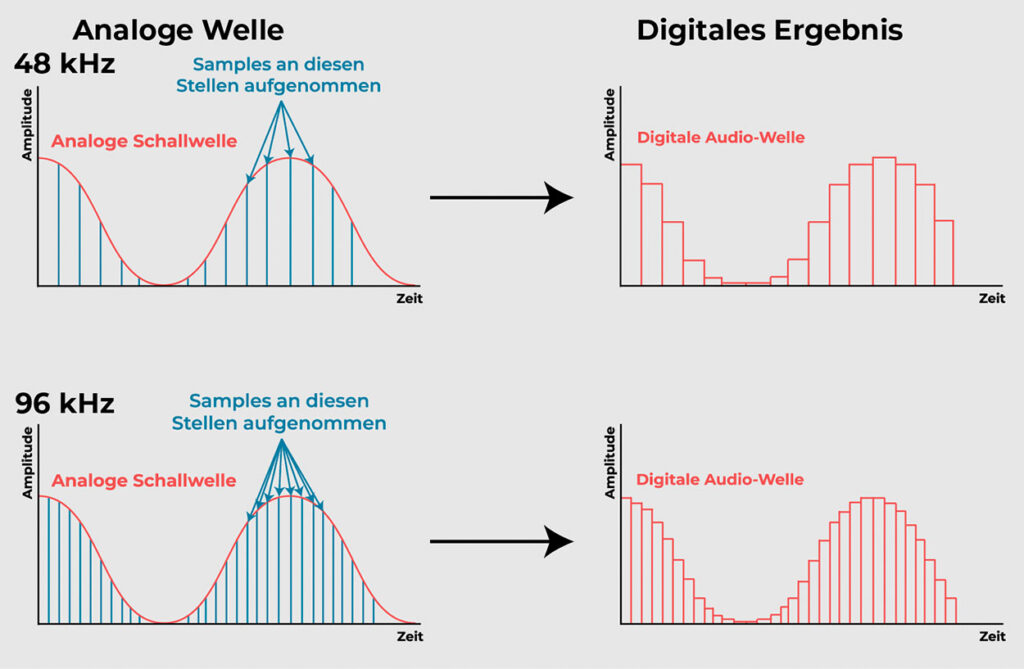

In digital audio, the sound wave of the audio signal is sampled (recorded) at regular intervals and a digital value is assigned to each sample. This process is called analog-to-digital conversion (ADC).

The quality of digital audio signals is determined by two factors: the sample rate (how many samples are recorded per second) and the bit depth (how much information is stored in each sample).
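To make the two factors concrete, here is a minimal Python sketch of what a converter does in principle. It assumes an ideal sine wave as the "analog" input; the function name `sample_and_quantize` and its parameters are made up for this illustration and do not come from any audio library.

```python
import math

def sample_and_quantize(freq_hz, sample_rate_hz, bit_depth, num_samples):
    """Sample a full-scale sine wave and round each sample to a signed integer."""
    max_code = 2 ** (bit_depth - 1) - 1  # largest positive integer value
    samples = []
    for n in range(num_samples):
        t = n / sample_rate_hz                         # time of the n-th sample in seconds
        analog = math.sin(2 * math.pi * freq_hz * t)   # "analog" value between -1.0 and 1.0
        samples.append(round(analog * max_code))       # stored digital value
    return samples

# 1 kHz tone at 48 kHz and 16 bit: 48 samples cover exactly one cycle
codes = sample_and_quantize(1000, 48000, 16, 48)
print(codes[:5])
```

The sample rate decides how many values are produced per second, and the bit depth decides how large the range of integers for each value is.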

What exactly is the sampling rate?

The sample rate indicates how many times per second the analog signal is measured to create the digital signal. The higher the sample rate, the more often the signal is sampled and the higher the frequencies that can be captured. However, it also requires more storage because more data is recorded.

The sample rate is specified in kHz and can be set in your DAW. If you're connecting multiple interfaces or other digital audio devices, you'll need to set them all to the same sample rate - but most USB and Thunderbolt audio interfaces automatically adjust their sample rate to match that of your DAW.

The following sample rates are typically available: 44.1 kHz, 48 kHz, 96 kHz, 192 kHz. These numbers are not chosen at random, but for a reason.

44.1 kHz

This sample rate was chosen for the Compact Disc (CD) format in the late 1970s. The reason for this choice is a bit complicated. It has to do with the highest frequency that can be accurately reproduced (the Nyquist frequency, which is half the sample rate).

The highest frequency most people can hear is about 20 kHz, so a 40 kHz sample rate would theoretically be sufficient. However, a filter is used to avoid aliasing, and this filter is not perfect - it needs a transition bandwidth. Therefore, 44.1 kHz is chosen instead of 40 kHz to give the filter some room.
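The relationship between sample rate, Nyquist frequency and aliasing can be sketched in a few lines of Python. The helper names below are hypothetical, and the aliasing formula assumes an idealized sampler with no anti-aliasing filter at all.

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency that can be represented at this sample rate."""
    return sample_rate_hz / 2

def alias_frequency_hz(tone_hz, sample_rate_hz):
    """Frequency at which a tone shows up after unfiltered sampling."""
    folded = tone_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)

print(nyquist_hz(44100))                 # 22050.0
print(nyquist_hz(44100) - 20000)         # 2050.0 Hz of room for the filter
print(alias_frequency_hz(30000, 44100))  # 14100: an inaudible tone becomes audible
print(alias_frequency_hz(1000, 44100))   # 1000: tones below Nyquist are unaffected
```

This is why the filter matters: without it, a 30 kHz tone that nobody can hear would fold back to 14.1 kHz, right in the audible range.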

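The exact figure comes from the video technology of the time. Early digital audio masters were stored on video tape using PCM adaptors, which placed 3 audio samples on each usable video line. With 294 usable lines per field at 50 fields per second (PAL), or 245 lines per field at 60 fields per second (NTSC), both systems yield 294 × 50 × 3 = 245 × 60 × 3 = 44,100 samples per second, which made 44.1 kHz a practical choice that met the technical requirements.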

48 kHz

This sample rate has become the standard for audio in professional video production, including digital television, digital video, DVD, and digital film sound. The exact reason for choosing 48 kHz is not entirely clear, but it likely offered a slightly higher rate that kept storage and processing demands reasonable while leaving a little more room for anti-aliasing filters than 44.1 kHz.

96 kHz and 192 kHz

These are simply multiples of 48 kHz and are used in high-resolution audio formats. The idea is that higher sampling rates can reproduce higher frequencies and provide a more accurate representation of the original analog signal. However, it is debatable whether these higher frequencies are actually audible to the human ear.

Which sample rate is the right one for me?

Choosing the right sample rate depends on several factors:

- 44.1 kHz: If you are producing music for CD or your recordings are primarily for streaming on the Internet, 44.1 kHz is a good choice. This is the standard sampling rate for CDs and most online music distribution platforms.

- 48 kHz: If you are producing audio for video, 48 kHz is the default sample rate. This is also a common sampling rate for podcasts and audiobooks.

- 96 kHz or 192 kHz: If you are producing high-resolution audio, such as for Blu-ray or special high-end audio releases, you may want to consider one of these higher sampling rates. However, recording in these formats requires so much memory and processing power that it's not worth it for most applications.

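The table below shows how much space the same recording takes up at each sample rate, with bit depth and channel count unchanged:

| 44.1 kHz | 48 kHz | 96 kHz | 192 kHz |
|---|---|---|---|
| 100 MB | 109 MB | 218 MB | 435 MB |
| 500 MB | 544 MB | 1.09 GB | 2.18 GB |
| 1 GB | 1.09 GB | 2.18 GB | 4.35 GB |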
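The numbers in the table follow directly from the sample rate. As a rough sketch, assuming uncompressed PCM audio (as in a WAV file) and ignoring the small file header, the size can be estimated like this. The function name `pcm_size_bytes` is just an illustration.

```python
def pcm_size_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Approximate size of uncompressed PCM audio, header not included."""
    bytes_per_sample = bit_depth // 8
    return sample_rate_hz * bytes_per_sample * channels * seconds

# One minute of 16-bit stereo audio at 44.1 kHz
base = pcm_size_bytes(44100, 16, 2, 60)
print(base)  # 10584000 bytes, roughly 10.6 MB

# The same minute at higher sample rates, relative to 44.1 kHz
for rate in (48000, 96000, 192000):
    ratio = pcm_size_bytes(rate, 16, 2, 60) / base
    print(rate, round(ratio, 3))
```

File size grows in direct proportion to the sample rate, so 192 kHz needs a little over four times the space of 44.1 kHz.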

I recommend that all music producers and sound engineers work at 48 kHz because it is a good compromise between quality and computing power. In theory, 44.1 kHz is perfectly sufficient, because CDs and Spotify & Co. run at 44.1 kHz anyway, but 48 kHz keeps you more flexible: you can always downsample from 48 kHz to 44.1 kHz later, whereas upsampling a 44.1 kHz recording to 48 kHz cannot restore the frequencies that were never captured.
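Converting between 48 kHz and 44.1 kHz is not a matter of dropping a few samples, because the two rates are related by an awkward ratio. A small sketch using Python's standard `fractions` module shows it. The function name `resample_ratio` is made up for this example, and the actual conversion in a DAW additionally involves filtering and interpolation that are not shown here.

```python
from fractions import Fraction

def resample_ratio(source_rate_hz, target_rate_hz):
    """Output samples produced per input sample, as an exact fraction."""
    return Fraction(target_rate_hz, source_rate_hz)

ratio = resample_ratio(48000, 44100)
print(ratio)          # 147/160
print(48000 * ratio)  # 44100 output samples for one second of 48 kHz audio
```

For every 160 samples at 48 kHz, the converter has to compute 147 new samples at 44.1 kHz.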

Can you hear the difference at higher sampling rates?

This is a very controversial topic in the audio world. The fact is that the human ear can only hear up to about 20 kHz, so 44.1 kHz is perfectly adequate for playback. In some cases, higher sample rates can be advantageous during audio processing, but in the finished mix there is no audible difference compared to 44.1 kHz.

Is it worth using higher sample rates?

In certain cases it can be worthwhile, for example when pitching samples down. However, I would not recommend recording a complete, large project at 192 kHz because the storage requirements would be enormous.

Normally, when a 44.1 kHz sample is pitched down, the top of the spectrum ends up empty: the file contains nothing above 22.05 kHz, so there is no information that could move down to fill the new gap in the highs.

However, if the sample has a sample rate of 96 kHz, it contains information up to 48 kHz. When it is pitched down, these "ultra-high" frequencies move into the 15-20 kHz range, so more of the high end is preserved.
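A quick calculation shows the effect. This sketch assumes a simple repitch, where the sample is played back more slowly so that every frequency is scaled by the same factor, and it assumes the recording really contains content up to its Nyquist frequency. The function name is hypothetical.

```python
def highest_frequency_after_repitch_hz(sample_rate_hz, semitones):
    """Upper limit of the spectrum after shifting the pitch by `semitones`."""
    nyquist = sample_rate_hz / 2
    return nyquist * 2 ** (semitones / 12)

# Pitching down by one octave (-12 semitones)
print(highest_frequency_after_repitch_hz(44100, -12))  # 11025.0
print(highest_frequency_after_repitch_hz(96000, -12))  # 24000.0
```

After dropping an octave, the 44.1 kHz sample has nothing left above roughly 11 kHz, while the 96 kHz sample still covers the entire audible range.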

What is the bit depth?

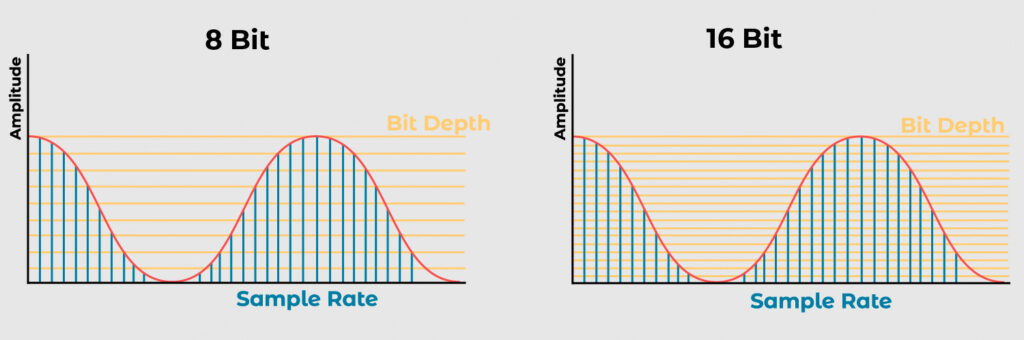

The bit depth indicates how many possible amplitude values are available for each sample. The higher the bit depth, the more finely the level of the signal can be recorded. In other words, it is the resolution with which the original analog audio is digitized.

In practice, the most common bit depths in digital audio recording are 16 bit and 24 bit.

- 16 bit (65,536 values): This is the standard bit depth for CDs and many digital audio formats. A bit depth of 16 bit allows for a theoretical dynamic range of about 96 decibels (dB). This means that the loudest signal that can be recorded is about 96 dB louder than the softest signal that can still be captured.

- 24 bit (16,777,216 values): This is the standard bit depth for professional audio recording and some high-resolution audio formats. A bit depth of 24 bit allows for a theoretical dynamic range of about 144 dB, which is far beyond what the human ear can actually hear. This means that more detail can be preserved in the quiet parts of a recording, which you might not hear directly but only when you turn up the volume. This gives you more leeway when editing and mixing - you don't have to record as "loud" as you used to because the noise floor is so low.
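Both the number of values and the dynamic range follow from the bit depth. The sketch below uses the common rule of thumb that the theoretical dynamic range is the ratio between the largest and smallest step, expressed in decibels (about 6 dB per bit). The function names are chosen only for this illustration.

```python
import math

def quantization_levels(bit_depth):
    """Number of distinct values a sample can take."""
    return 2 ** bit_depth

def dynamic_range_db(bit_depth):
    """Theoretical dynamic range: 20 * log10 of the number of levels."""
    return 20 * math.log10(quantization_levels(bit_depth))

for bits in (8, 16, 24):
    print(bits, quantization_levels(bits), round(dynamic_range_db(bits), 1))
```

For 16 bit this gives 65,536 values and about 96.3 dB, and for 24 bit 16,777,216 values and about 144.5 dB, which matches the rounded figures above.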

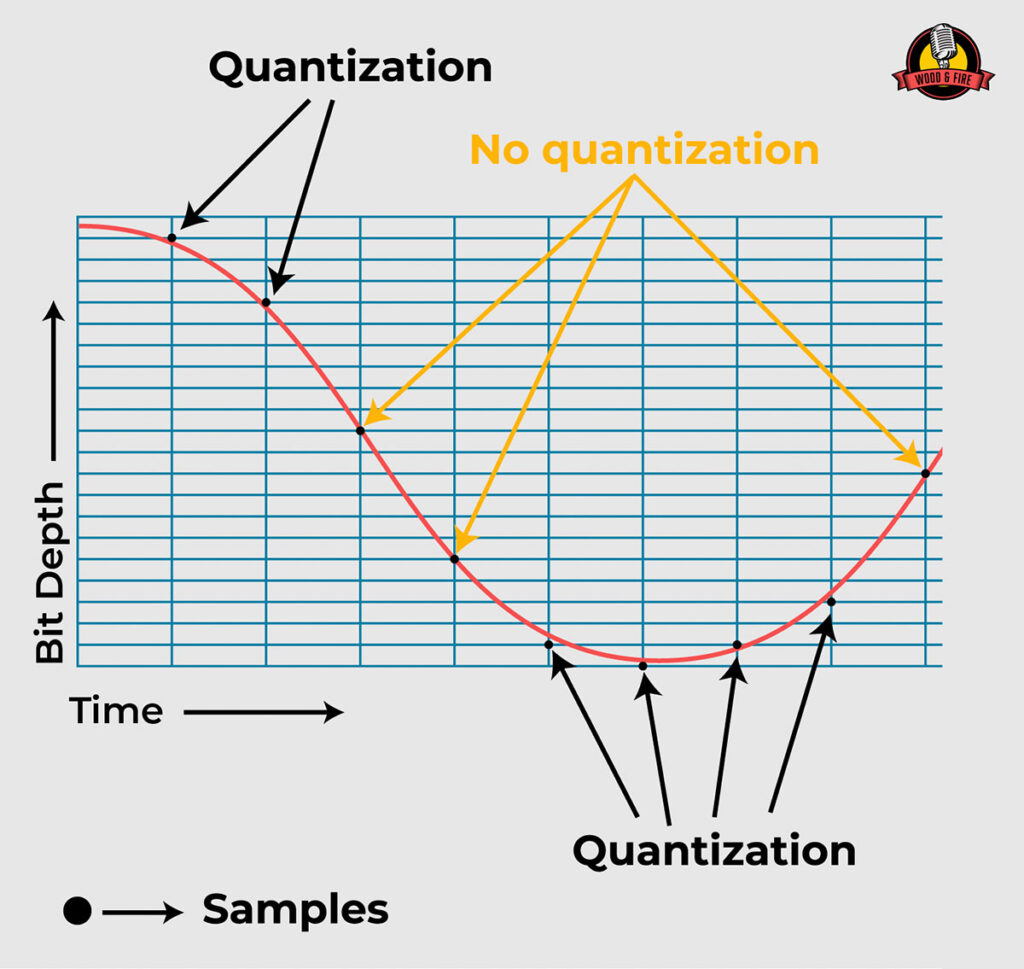

The problem, however, is that the digital wave can never match the shape of the analog wave 100%, regardless of bit depth. When we convert real sounds to a digital format, we try to capture a smooth, flowing sound wave with a series of individual values. But often the exact value we need is not available, so we have to round to the nearest available value. This process is called quantization.

This rounding introduces small errors into the digital sound. We hear these errors as a very soft background noise, similar to the soft hiss you can hear in a quiet room. This is called quantization noise, and it forms the noise floor of the recording.

Sometimes, especially with very quiet or very regular signals, the rounding errors follow the signal and form repeating patterns that make the noise more noticeable at certain frequencies. This is called correlated noise.

To avoid these patterns, we can add a small amount of random noise to the signal before rounding the values. This process is called dithering, and it helps spread the noise more evenly and make it less noticeable.
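The effect of dithering is easiest to see with a signal that is quieter than a single quantization step. The following sketch is only an illustration under a few assumptions: values are measured in quantization steps, the dither is triangular noise (often called TPDF dither, one common choice among several), and all names are invented for this example.

```python
import math
import random

def quantize(value_in_steps):
    """Round to the nearest quantization step."""
    return math.floor(value_in_steps + 0.5)

def tpdf_dither(rng):
    """Triangular noise within +/- 1 step: the sum of two uniform random values."""
    return rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# A very quiet 100 Hz tone at 48 kHz whose peak is only 0.4 quantization steps
signal = [0.4 * math.sin(2 * math.pi * 100 * n / 48000) for n in range(4800)]

plain = [quantize(x) for x in signal]
dithered = [quantize(x + tpdf_dither(rng)) for x in signal]

# How strongly each quantized version still follows the original tone
plain_match = sum(x * q for x, q in zip(signal, plain))
dithered_match = sum(x * q for x, q in zip(signal, dithered))

print(set(plain))      # {0}: without dither the tone is rounded away completely
print(plain_match, dithered_match > 0)
```

Without dither, every sample rounds to zero and the tone disappears. With dither, the output jumps between -1, 0 and 1, which sounds like hiss, but on average it still follows the original tone.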

The level of this background noise determines the quietest sound we can record - because our signal must always be louder than the background noise to keep it clean.

On the other hand, there is a limit to how loud we can record before it starts to distort. This area between the softest and the loudest sound is the dynamic range mentioned earlier.

What kind of bit depth do I need?

24-bit is very convenient for recording because you have to worry far less about levels. The dynamic range is so wide and the noise floor so low that you can comfortably record with peaks around -15 dB and simply raise the volume later. The headroom also ensures that the signal does not clip.
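A rough calculation shows why this works. The sketch assumes that "-15 dB" means peaks 15 dB below digital full scale and uses the theoretical figure of about 6.02 dB per bit. Real converters have a higher noise floor than the theory suggests, so treat the numbers as an upper bound. The function name is hypothetical.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB per bit

def remaining_dynamic_range_db(bit_depth, peak_level_db):
    """Theoretical range between the recorded peak and the quantization floor."""
    return bit_depth * DB_PER_BIT + peak_level_db

print(round(remaining_dynamic_range_db(24, -15), 1))  # 129.5
print(round(remaining_dynamic_range_db(16, 0), 1))    # 96.3
```

Even with 15 dB of unused headroom, a 24-bit recording still has over 30 dB more range than a 16-bit recording driven right up to full scale.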

In the past, with 16-bit recorders, you always had to be careful to record as loud as possible so that the background noise was not audible - and then there was always the risk of clipping. With 24-bit, this problem no longer exists.

So you should always record and work in 24 bit. Once the song is mastered, export the final master in 16 bit, as this is the standard for CDs and a format that streaming services accept.

Can you hear the difference between 16 bit and 24 bit?

No, you can't hear the difference when you listen to a finished, mastered track. Very few pieces of music have a dynamic range greater than 96 dB (perhaps some classical music), so offering a larger dynamic range makes little sense.

Finished pop, rock, R&B, hip-hop, and country music tends to have a relatively modest dynamic range - typically around 10 dB - so theoretically even 8 bits (about 48 dB) would be sufficient. This is partly because the music is heavily compressed during production (with compressors or limiters), which reduces the dynamic range of the song.

This, along with storage space, is why CDs and many streaming services still use 16 bit. The only advantage of 24 bit over 16 bit is the increased dynamic range, which results in a lower noise floor during recording.

So what is the best combination of sample rate and bit depth?

For music production, a sample rate of 48 kHz at a bit depth of 24 bit is recommended as a good compromise between quality and file size. This gives you a very wide dynamic range to work with and a frequency range up to 24 kHz - more than we can actually hear.

This gives us all the options we need to export to any medium later. The final audio file that we deliver to the streaming platforms should be 44.1 kHz at 16 bit, the CD standard, which streaming services also accept.
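In practice your DAW's export dialog takes care of this, but for illustration, here is a minimal sketch of what a 16-bit, 44.1 kHz file looks like when written with Python's standard `wave` module. It assumes mono audio given as floating-point values between -1.0 and 1.0, and it deliberately leaves out sample rate conversion and dithering. The function name `write_16bit_wav` is made up for this example.

```python
import io
import math
import struct
import wave

def write_16bit_wav(target, samples, sample_rate_hz=44100):
    """Write mono float samples (-1.0..1.0) as 16-bit PCM to a path or file object."""
    frames = b"".join(
        struct.pack("<h", max(-32768, min(32767, round(s * 32767))))
        for s in samples
    )
    with wave.open(target, "wb") as wav:
        wav.setnchannels(1)               # mono
        wav.setsampwidth(2)               # 2 bytes per sample = 16 bit
        wav.setframerate(sample_rate_hz)  # 44.1 kHz by default
        wav.writeframes(frames)

# One second of a 440 Hz tone at half of full scale, written to memory
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 44100) for n in range(44100)]
buffer = io.BytesIO()
write_16bit_wav(buffer, tone)

# Read the file back to check the format
buffer.seek(0)
with wave.open(buffer, "rb") as wav:
    info = (wav.getframerate(), wav.getsampwidth() * 8, wav.getnframes())
print(info)  # (44100, 16, 44100)
```

Passing a file name instead of the in-memory buffer writes the same data to disk.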